Intel's AI an Abuse Antidote?

- trustmustbeearned

- Oct 10, 2019

Date: Oct. 10, 2019

Subject: AI’s Applicability to Addressing Abusive Actors’ Behavior on Social Media

To: Andy D. Bryant, Chairman of the Board; Peng Bai, Corp. VP, Logic Technology Development; Daaman Hejmadi, Corp. VP, Infrastructure and Platform Solutions Group; Isic Silas, Corp. VP, Client Platforms Program Office; Kalyan Thumaty, Corp. VP, Product Enablement Solutions Group; Anna Bethke, Intel, Head AI for Social Good

While listening to the NPR Marketplace podcast for Oct. 8, I was interested in a segment on Intel’s efforts to develop AI (artificial intelligence) applications that could be used to confront bullying, hate speech, and other abusive practices that occur on most if not all social media platforms. It is not especially unusual for a technology company like Intel to explore developments that can help address problems that are not just rampant in the media and social media industries, but that those technology and platform companies are working to address themselves. Consider that Facebook, Twitter, WhatsApp, Instagram, ASKfm, and others are working to provide protections against various abuse situations; at least that’s what they tell politicians and regulators.

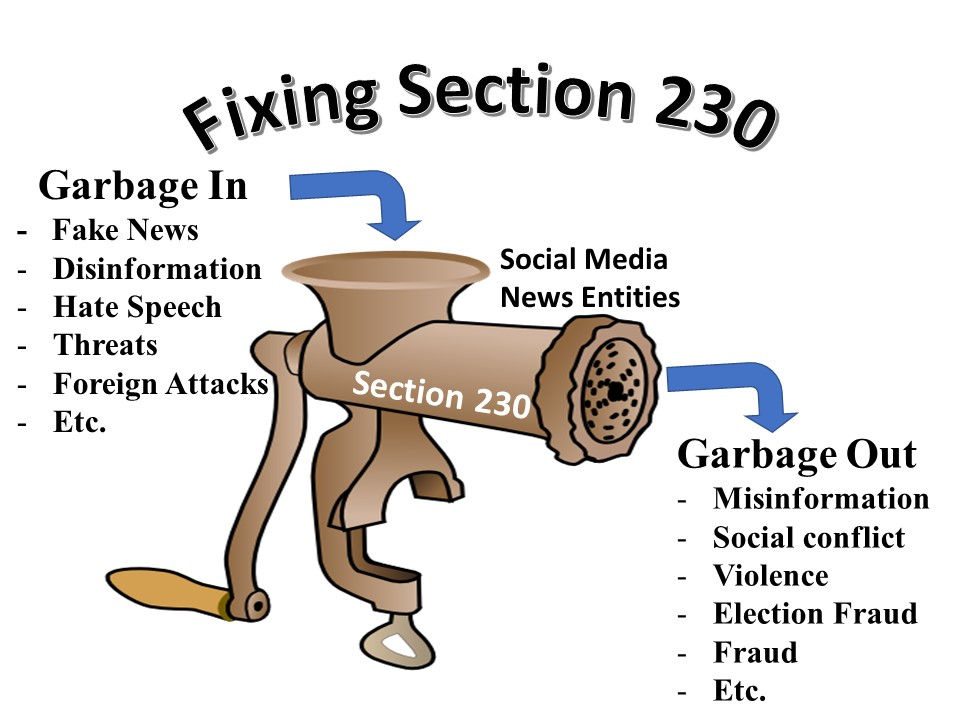

This is where AI comes into the equation. Identifying and restricting abuse incidents today is still very labor intensive. So, developing AI applications that can do it faster and/or better would benefit the technology companies, platform providers, regulatory entities, business clients, and users and user communities. It makes perfect sense for everyone involved in the industry, whether technology companies, service providers, or clients whose own businesses depend upon the media and social-media businesses, to seek solutions to these problems. The problems can be created by both anonymous and known users who engage in bullying, promotion of hate speech, harassment, dissemination of fake news, spam, hacking, …, and by other entities that cause social issues for users of all these services and platforms.

The nice thing about researching what AI can do and how it can benefit these businesses is that it can be much more economically effective than what exists today. More importantly, since it’s in-progress research, it provides a prophylactic argument against regulatory action. The unfortunate aspect of relying upon the promise of AI is that it can cause solutions that would be effective now to be ignored, even though that promise has yet to deliver anything as effective as what can be done today and then improved with AI without delay. What’s the trade-off between getting 80% of the problem solved now versus getting 95+% solved an indeterminate number of years down the proverbial road?

Intel could provide a much more effective and capable solution for handling abusive users’ behavior on any or all social-media, media, or other applications that connect users and communities by incorporating its AI technical capabilities into a more functionally capable process. There’s nothing wrong or ineffective about using AI; it’s a tool in the box that can be highly effective. However, even without AI, Facebook or other platforms could already have competently addressed most of their abuse problems. They may not know how they could have done this, but that doesn’t mean it could not have been done, can’t be done, or should not be done.

The value for Intel in providing a solution that incorporates not just its AI capabilities but a richer and more capable set of tools for solving the problem is that its business benefits. That’s the reason it is pursuing AI tools now. Making your tools more useful and productive only makes practical sense. A key to a more productive toolset is to leverage its strengths in a process methodology that goes beyond just an AI environment. Oddly, while the platform providers haven’t seen the full opportunity yet, there’s nothing that prevents Intel from both recognizing it and then providing it.

This situation is similar to one that arose about 30 years ago, when speech recognition technology was emerging as a viable and productive solution. At the time, the technology was being considered for an application that it just wasn’t ready for, and it was going to be returned to the research domain to await further advancement. Before that decision was made, I stated that the state-of-the-art technology was ready for use, and in fact for a more beneficial application than the one it was being considered for. When challenged, I simply said: “I can prove it.” I believe the same is true of the state of the art in AI: it can be used productively to deal with any number of problems present in social media platform environments. All that’s required is to understand how to incorporate the AI capabilities into an operational process. Doing so will improve current levels of performance in handling various abuse conditions, do it far more cost effectively, and provide additional revenues to those platform and service providers.

I am sure that someone will get there eventually. It’s a normal progression of technology versus need. So, why wait for the eventual progression; why not just achieve it?

Respectfully,
